Centralized Radar Architecture Improves Perception Capabilities

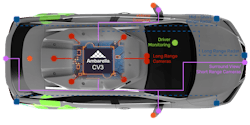

Edge AI (artificial intelligence) semiconductor company Ambarella Inc. has developed a centralized 4D imaging radar architecture that aims to improve the perception capabilities of autonomous driving systems. The technology enables central processing of raw radar data and low-level fusion of that data with other sensor inputs, such as cameras, lidar and ultrasonics.

With these capabilities, greater environmental perception and safer path planning can be achieved for advanced driver assistance systems (ADAS) as well as for different levels of autonomous driving across a range of applications.


Moving Radar Beyond the Edge

According to Steven Hong, VP and General Manager of Radar Technology at Ambarella Inc., what makes the new technology unique is that no radar on the market today is processed centrally. “If you look at all the commercial radars that are deployed in [various] industries…typically all of the processing for radar lives inside of the radar sensor itself,” he said in an interview with Power & Motion.

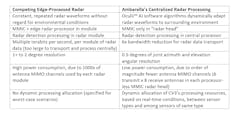

Traditional radar sensor modules contain all necessary components – the antennas as well as analog and digital processing – in a single package. However, the module can be on the larger side and requires more antennas to achieve higher resolutions. “For a high-performance radar, you want to have hundreds if not thousands of antennas,” said Hong.

Doing so provides clearer images and longer range. He said the challenge is that as you add more antennas, more data is generated, which makes it harder to move the data to a location where it can be processed.

He explains that today, camera data is typically processed centrally; there is no processing chip for camera data installed directly next to the camera. Instead, the camera data is sent to a more powerful processor elsewhere in the vehicle where it is processed along with other vehicle information. “But for radars, because there is so much data being generated – on the order of multiple terabytes – you can’t move the data anywhere unless you have processed it,” said Hong.
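A rough back-of-the-envelope estimate helps show why data volume grows with antenna count. The sketch below assumes each receive channel is digitized at a fixed sample rate and bit depth, so the raw output scales linearly with the number of channels. The function name and the figures used (40 million samples per second, 16 bits per sample) are illustrative assumptions, not Ambarella specifications.

```python
def raw_data_rate_gbps(num_rx_channels, sample_rate_hz, bits_per_sample):
    """Raw digitized output of a radar front end, in gigabits per second.

    Assumes every receive channel is sampled continuously at the same
    rate and bit depth (a simplification; real radars sample in bursts).
    """
    return num_rx_channels * sample_rate_hz * bits_per_sample / 1e9


# Illustrative values only: 40 MS/s and 16 bits per sample are assumptions.
for channels in (8, 64, 512):
    rate = raw_data_rate_gbps(channels, 40e6, 16)
    print(f"{channels:4d} receive channels -> {rate:.2f} Gbit/s")
```

Under these assumptions, going from 8 to 512 receive channels multiplies the raw data rate by 64, which is the scaling problem Hong describes.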

Radar technology is trending toward the generation of more data, forcing processing to remain at the edge, he said. However, this places constraints on the module. Increased processing requirements, together with the need for more antennas, often result in a larger, thicker radar module that generates more heat. All of this adds to the cost of the system and can create installation challenges for OEMs, as the space and heat dissipation capacity available are often limited, depending on the application.

Hong said this is why radar has lagged in performance compared to camera and lidar “because there is a ceiling on how much you can dissipate heat and how much cost and performance you can have in that envelope.”

To overcome these challenges, Ambarella developed its new radar architecture, which enables high-resolution data collection without the need for a large number of antennas.


Software is a Key Enabler

Hong said the company's software is what allowed it to reduce the number of antennas necessary without compromising performance. In traditional radar designs, the use of multiple antennas enables effective measurement of what is happening in the environment around a vehicle. “If you want a full picture, you need all the different measurements in order to complete that picture,” he explained.

Unlike traditional radar, Ambarella utilizes an intelligent waveform which adaptively learns from the environment using AI software algorithms. “We send out different information at different times,” said Hong. “This allows us with very few antennas to still capture the whole scene.”

As Ambarella explained in its press release announcing the launch of its new radar architecture, by applying AI software to dynamically adapt the radar waveforms generated with existing monolithic microwave integrated circuit (MMIC) devices, and using AI sparsification to create virtual antennas, its Oculii technology reduces the antenna array for each processor-less MMIC radar head in this new architecture to six transmit x eight receive.

The software algorithms are optimized to run on Ambarella’s CV3 AI domain controller SoC (system on a chip) family. This allows higher resolution and performance to be achieved: angular resolution of 0.5 degrees, an ultra-dense point cloud of up to tens of thousands of points per frame and a detection range of 500 m or more.
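To put these figures in context, the sketch below applies two textbook relationships for conventional radar arrays: a MIMO array with a given number of transmit and receive antennas forms a virtual array with one element per transmit-receive pair, and a uniform linear array has a broadside 3 dB beamwidth of roughly 0.886 divided by its length in wavelengths (in radians). It assumes, purely for illustration, that all virtual elements sit in a single line at half-wavelength spacing, which real arrays generally do not. It does not model Ambarella's AI sparsification or adaptive waveforms, and the function names are hypothetical.

```python
import math


def beamwidth_deg(num_elements, spacing_wavelengths=0.5):
    """Approximate broadside 3 dB beamwidth of a uniform linear array."""
    aperture_wavelengths = num_elements * spacing_wavelengths
    return math.degrees(0.886 / aperture_wavelengths)


def elements_for_beamwidth(target_deg, spacing_wavelengths=0.5):
    """Elements a uniform linear array would need to reach a target beamwidth."""
    return math.ceil(0.886 / (math.radians(target_deg) * spacing_wavelengths))


# Conventional MIMO virtual array for the 6 transmit x 8 receive radar head.
virtual_elements = 6 * 8

print("Virtual elements:", virtual_elements)
print("Beamwidth if arranged in one line (deg):", round(beamwidth_deg(virtual_elements), 2))
print("Elements needed for 0.5 deg:", elements_for_beamwidth(0.5))
```

Under these simplifying assumptions, 48 virtual elements in a line would resolve only about 2 degrees, while 0.5 degrees would call for roughly 200 elements. That gap is consistent with Hong's remark that conventional high-performance radars need hundreds of antennas, and it is the gap Ambarella says its software closes.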

The CV3 is designed to process both radar and camera data, which is a unique capability in the industry, said Hong. This makes low-level sensor fusion possible. Most systems today process radar and camera data on separate chips and use a third chip to combine information from both, which can cause useful information to be filtered out before it is combined. Fusing the data at a low level on a single chip minimizes this loss of information.

Radar and camera operate at different wavelengths and frequencies, said Hong. Radar, for instance, can see much further and naturally senses speed. Being able to combine data from both on a single processing chip enables much richer information to be collected than if each were processed separately. “We believe this is something that is really going to open up the performance of these perception systems in autonomous vehicles and robots,” he said.

Benefits to a Centralized Architecture

By reducing the number of antennas and processing data on a single chip, “we are able to really allow radar to take advantage of the same things that camera has taken advantage of for many years, centralizing the data,” said Hong.

“Centralizing the processing allows us to shift the compute around the different parts of the vehicle and different sensors depending on what we need,” he explained. For instance, a vehicle driving down the highway needs to see as far ahead as possible to avoid collisions with objects in the road. In a parking lot, by contrast, seeing what is directly in front of or around the vehicle matters more than seeing long distances.
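One way to picture this shifting of compute is as a shared processing budget divided among radar heads according to the driving scenario. The sketch below is a simplified illustration only: the scenario names, sensor names, weights and the `allocate_compute` function are all hypothetical and do not describe how Ambarella's CV3 actually schedules work.

```python
# Hypothetical relative priority of each radar head per driving scenario.
MODE_WEIGHTS = {
    "highway": {"front": 6, "rear": 2, "left": 1, "right": 1},
    "parking": {"front": 1, "rear": 1, "left": 1, "right": 1},
}


def allocate_compute(mode, total_budget):
    """Split a shared compute budget across sensors in proportion to their weights."""
    weights = MODE_WEIGHTS[mode]
    total_weight = sum(weights.values())
    return {
        sensor: total_budget * weight / total_weight
        for sensor, weight in weights.items()
    }


# Budget expressed as a percentage of the central processor's radar compute.
for mode in MODE_WEIGHTS:
    print(mode, allocate_compute(mode, 100))
```

In this toy model, the forward-facing radar receives most of the budget on the highway and an equal share in a parking lot. With edge processing, each sensor's compute is fixed inside its own module and cannot be reassigned in this way.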

The advantage of a centralized architecture over an edge-based one is that the sensing system can more easily shift between environments and the types of processing each requires to ensure safe vehicle operation. And this can be achieved without making the system overly complicated or expensive.

Processing data in a centralized location also enables the radar sensor itself to be smaller, thinner and consume less power because processing is no longer taking place within the module. This reduces the amount of installation space needed for the radar and allows for more flexibility in where it is installed.

With the centralized architecture and Ambarella’s technology, Hong said more detailed information can be obtained. He noted that radar data is typically blurry, which can make it difficult to know what is being detected. Ambarella’s new architecture improves clarity and resolution to a level he said is unprecedented for radar and on par with current lidar technology.

Hong said that in the long run, the company’s technology could help radar become a better option than lidar because Ambarella’s computing capabilities allow higher resolutions and longer range with each generation of its technology. Another advantage is radar's lower cost of production compared to lidar.

He noted Ambarella also uses existing hardware which has been deployed in vehicles around the world for several years, ensuring its reliability and robustness in various applications. While passenger vehicles are one of the largest segments today for perception and radar technology, Hong said the company is targeting a range of applications with its new centralized radar architecture. These include heavy-duty trucks and off-road machinery as well as autonomous mobile robots (AMRs) and automated guided vehicles (AGVs).


“We believe this is the right combination of sensors as well as compute that will bring safety and autonomy to all vehicles,” he concluded.